QCoro

QCoro is a C++ library that makes it possible to use C++20 coroutines with Qt. It provides the necessary tools to create coroutines as well as coroutine-friendly wrappers for native Qt types.

I have been contributing to KDE for over a decade. I was involved in KDE Telepathy, KScreen and, most prominently, KDE PIM. I am the Akonadi maintainer and author of the Google integration.

A Rust crate for Cargo build scripts that provides a simple way to find and use CMake packages installed on the system.

An extension for the VS Code IDE that provides documentation for Qt classes and methods when you hover over them in the editor.

This release brings improvements to generators, better build system integration and several bugfixes.

As always, big thanks to everyone who reported issues and contributed to QCoro. Your help is much appreciated!

The biggest improvement in this release is that QCoro::AsyncGenerator coroutines now support

directly co_awaiting Qt types without the qCoro() wrapper, just like QCoro::Task coroutines

already do (#292).

Previously, if you wanted to await a QNetworkReply inside an AsyncGenerator, you had to

wrap it with qCoro():

QCoro::AsyncGenerator<QByteArray> fetchPages(QNetworkAccessManager &nam, QStringList urls) {
    for (const auto &url : urls) {
        auto *reply = co_await qCoro(nam.get(QNetworkRequest{QUrl{url}}));
        co_yield reply->readAll();
    }
}

Starting with QCoro 0.13.0, you can co_await directly, just like in QCoro::Task:

QCoro::AsyncGenerator<QByteArray> fetchPages(QNetworkAccessManager &nam, QStringList urls) {
    for (const auto &url : urls) {
        auto *reply = co_await nam.get(QNetworkRequest{QUrl{url}});
        co_yield reply->readAll();
    }
}

- .end() method is now const (and constexpr), so it can be called on const generator objects (#294).
- GeneratorIterator can now be constructed in an invalid state, allowing lazy initialization of iterators (#318).
- qcoro.h now only includes QtNetwork and QtDBus headers when those features are actually enabled, resulting in cleaner builds when optional modules are disabled (#280).

If you enjoy using QCoro, consider supporting its development on GitHub Sponsors or buy me a coffee on Ko-fi (after all, more coffee means more code, right?).

I wasted an entire afternoon today trying to figure this out, so here’s a quick note for future reference - hopefully to save someone else’s afternoon.

Today, I was working on adding Rust bindings for an internal C++ library. The C++ library itself is built using CMake and produces a statically-linked library, let’s call it libfoo.a.

I used the cxx crate to generate the glue code between Rust and the C++ library, wrote the higher-level Rust API and ran cargo build. The crate built just fine, so I moved on to integrating it into our larger Rust project. However, when I tried to build the project, I got a linker error:

note: rust-lld: error: undefined symbol: url_to_json[abi:cxx11](std::basic_string_view<char, std::char_traits<char>> const&)

I double-checked the build.rs script of the bindings crate to ensure that libfoo.a was being linked. It was. I then used nm to confirm that the symbol actually exists in libfoo.a. It did. So what now? I spent a lot of time googling around, trying different “hacks” in the build.rs script and other sorcery. I had a temporary success by adding a complex build.rs to the consumer project, but that wasn’t really a scalable solution.

The most confusing part was that I had used the exact same approach and code to create bindings for another of our C++ libraries a while ago, and there it all just worked. No special linker flags, no magical cargo incantations, no build.rs in projects that used the bindings crate. What was different this time?

After literally hours of trial and error and out of desperation, I decided to objdump the libfoo.a to look at the disassembly of the problematic url_to_json function. I am far from an assembly expert, but the disassembled output looked very suspicious, even to my untrained eye.

0000000000000000 <.gnu.lto__Z11url_to_jsonB5cxx11RKSt17basic_string_viewIcSt11char_traitsIcEE.1350.d0d92fceb60052fc>:

0: 28 b5 2f fd 60 72 sub %dh,0x7260fd2f(%rbp)

6: 02 25 12 00 b6 a0 add -0x5f49ffee(%rip),%ah

c: 71 46 jno 54 <.gnu.lto__Z11url_to_jsonB5cxx11RKSt17basic_string_viewIcSt11char_traitsIcEE.1350.d0d92fceb60052fc+0x54>

e: e0 d0 loopne ffffffffffffffe0

10: 36 1d f8 c7 f0 69 ss sbb $0x69f0c7f8,%eax

16: 6c insb (%dx),%es:(%rdi)

17: 81 05 c4 c0 e0 96 61 addl $0x149c4e61,-0x691f3f3c(%rip)

1e: 4e 9c 14

21: a0 ad c0 3f f4 3e de movabs 0x102dde3ef43fc0ad,%al

28: 2d 10

2a: a4 movsb %ds:(%rsi),%es:(%rdi)

2b: 61 (bad)

(truncated)

Those instructions don’t look sensible at all, especially when compared to what the actual C++ code looks like. And what about the “bad” instruction? It’s not like I haven’t run into miscompilations before, but this was much more than that.

At this point, I asked an AI about the situation, and the answer was clear:

When working with static libraries and Clang, issues with assembly output from tools like objdump can arise, particularly when Link-Time Optimization (LTO) is enabled.

Wait! Did it say LTO? The static library is definitely built with LTO (it’s even in the name of the symbol). Quick check into the library’s CMakeLists.txt and bingo: LTO is enabled by default. I disabled it and…the consumer project built and linked without a single error. Problem solved.

My (shallow) understanding of LTO has always been that it’s just a special optimizer pass at link time, when the linker can see the final executable (or shared library) as a whole, and can perform optimizations across translation units and more efficiently eliminate unused code. A static library is just a collection of object files, so LTO should not really play any role here, right?

The reality is that with LTO enabled, compilers “cheat” (yes, compilers - GCC does this as well), and instead of producing object files with the final machine code, they produce object-like files that contain the intermediate representation (IR) of the code. IR (GCC calls its IR GIMPLE) is a compiler-specific representation of the code used during the compilation process. It’s no longer the original source code, but it’s not the final machine code either. Having access to the IR allows the linker to perform advanced optimizations that wouldn’t be possible if it only had access to the final machine code. The final codegen that emits the executable machine code happens after the optimization pass.

This entire process is actually described quite nicely in the LLVM documentation about LTO - which is information that is useful only when you know that you need it :-)

However, if the libfoo.a contained LLVM IR, and rustc also uses LLVM (and supports LTO on its own), why didn’t it Just Work™? The problem is that Rust will only perform LTO between Rust crates by default.

Luckily, it’s possible to coerce the Rust compiler to perform cross-language LTO, but if you are using the cxx crate, there’s an extra step involved.

This is what ultimately worked for me, and allowed me to build both without LTO in debug mode and with LTO in release mode.

In your build.rs, you must make sure that the generated bridge code is also compiled with LTO enabled (when needed):

let enable_lto = std::env::var("PROFILE").unwrap_or_default() == "release";

// Compile the C++ library
cmake::Config::new(".")
    .define("ENABLE_LTO", if enable_lto { "ON" } else { "OFF" })
    .build_target("all")
    .build(); // without this call, the CMake build is never actually run

// Build the cxx bridge
let mut bridge = cxx_build::bridge("src/bridge.rs");
bridge.file("src/bridge.cpp");
// ... additional include paths, source files, flags, etc.

if enable_lto {
    // Enable LTO for the bridge compilation as well
    bridge.flag_if_supported("-flto");
}

bridge.compile("foo_bridge");

Then compile it. Just make sure you use Clang for building the C++ code as well (the cc crate inside cxx should pick this up automatically from the environment variables):

# Make sure to use clang!
export CXX=clang++
export CC=clang

# Enable LTO linker plugin
export RUSTFLAGS="-Clinker-plugin-lto"

# Let's gooooo
cargo build --release

An important requirement is that the C/C++ code must be compiled with a version of Clang that is compatible with the LLVM version used by rustc. The compatibility matrix is documented in the rustc book. In my case, I was using rustc 1.90 (LLVM 20.1.8) and Clang 20.1.8.

If you ever run into undefined reference linker errors when trying to link static C or C++ libraries with your Rust code, double check whether the static library was built with LTO enabled, and make sure to enable cross-language LTO in your Rust build as well.

I started this blog back in 2010. Back then I used WordPress and it worked reasonably well. In 2018 I decided to switch to a statically generated site, mostly because the WordPress blog felt slow to load and it was a hassle to maintain. Back then the go-to static site generator was Jekyll, so I went with that. Lately I’ve been struggling with it though, because in order to keep all the plugins working, I needed to use older versions of Ruby, which meant I had to use Docker to build the blog locally. Overall, it felt like too much work and for the past few years I’ve been eyeing Hugo - more so since Carl and others migrated most of the KDE websites to it. I mean, if it’s good enough for KDE, it’s good enough for me, right?

So this year I finally got around to making the switch. I migrated all the content from Jekyll. This time I actually went through every single post, converted it to proper Markdown, fixed formatting, images etc. It was a nice trip down memory lane, reading all the old posts, remembering all the sprints and Akademies… I also took the opportunity to clean up the tags and categories, so that they are more consistent and useful.

Finally, I have rewritten the theme - I originally ported the template from WordPress to Jekyll, but it was a bit of a mess and responsiveness was “hacked” in via JavaScript. Web development (and my skills) have come a long way since then, so I was able to leverage more modern CSS and HTML features to make the site look the same, but be more responsive and accessible.

When I switched from WordPress to Jekyll, I was looking for a way to preserve comments. I found Isso, which is basically a small CGI server backed by SQLite that you can run on the server and embed into your static website through JavaScript. It could also natively import comments from WordPress, so that’s the main reason why I went with it, I think. Isso was not perfect (although the development has picked up again in the past few years) and it kept breaking for me. I think it hasn’t worked on my blog for the past few years and I just couldn’t be bothered to fix it. So, I decided to ditch it in favor of another solution…

I wanted to keep the comments for old posts by generating them as static HTML from Isso’s SQLite database; alas, the database file was empty. Looks like I lost all comments at some point in 2022. It sucks, but I guess it’s not the end of the world. Due to the nature of how Isso worked, not even the Wayback Machine was able to archive the comments, so I guess they are lost forever…

For this new blog, I decided to use Carl’s approach of embedding replies to a Mastodon post. I think it’s a neat idea and it’s probably the most reliable solution for comments on a static blog (that I don’t have to pay for, host myself or deal with privacy concerns or advertising).

I have some more ideas regarding the comments system, but that’s for another post ;-) Hopefully I’ll get to blog more often now that I have a shiny new blog!

Enjoy the holidays and see you in 2025 🥳!

A long-overdue release which has accumulated a bunch of bugfixes but also some fancy new features…read on!

As always, big thanks to everyone who reported issues and contributed to QCoro. Your help is much appreciated!

QCoro::LazyTask<T>

The biggest new feature in this release is the brand-new QCoro::LazyTask<T>.

It’s a new return type that you can use for your coroutines. It differs from QCoro::Task<T>

in that, as the name suggests, the coroutine is evaluated lazily. What that means is that when

you call a coroutine that returns LazyTask, it will return immediately without executing

the body of the coroutine. The body will be executed only once you co_await on the returned

LazyTask object.

This is different from the behavior of QCoro::Task<T>, which is eager, meaning that it will

start executing the body immediately when called (like a regular function call).

QCoro::LazyTask<int> myWorker()
{
    qDebug() << "Starting worker";
    co_return 42;
}

QCoro::Task<> mainCoroutine()
{
    qDebug() << "Creating worker";
    const auto task = myWorker();
    qDebug() << "Awaiting on worker";
    const auto result = co_await task;
    // do something with the result
}

This will result in the following output:

mainCoroutine(): Creating worker

mainCoroutine(): Awaiting on worker

myWorker(): Starting worker

If myWorker() were a QCoro::Task<T> as we know it, the output would look like this:

mainCoroutine(): Creating worker

myWorker(): Starting worker

mainCoroutine(): Awaiting on worker

The fact that the body of a QCoro::LazyTask<T> coroutine is only executed when co_awaited has one

very important implication: it must not be used for Qt slots, Q_INVOKABLEs or, in general, for any

coroutine that may be executed directly by the Qt event loop. The reason is that the Qt event loop

is not aware of coroutines (or QCoro), so it will never co_await on the returned QCoro::LazyTask

object - which means that the code inside the coroutine would never get executed. This is the

reason why the good old QCoro::Task<T> is an eager coroutine - to ensure the body of the coroutine

gets executed even when called from the Qt event loop and not co_awaited.

For more details, see the documentation of QCoro::LazyTask<T>.

This is something that wasn’t clearly defined until now (both in the docs and in the code), which is

what happens when you try to co_await on a default-constructed QCoro::Task<T> (or QCoro::LazyTask<T>):

co_await QCoro::Task<>(); // will hang indefinitely!

Previously this would trigger a Q_ASSERT in a debug build and most likely a crash in a production build.

Starting with QCoro 0.11, awaiting such a task will print a qWarning() and will hang indefinitely.

The same applies to awaiting a moved-from task, which is identical to a default-constructed task:

QCoro::LazyTask<int> task = myTask();
handleTask(std::move(task));
co_await task; // will hang indefinitely!

We have dropped official support for older compilers. Since QCoro 0.11, the officially supported compilers are:

QCoro might still compile or work with older versions of those compilers, but we no longer test it and do not guarantee that it will work correctly.

The reason is that coroutine implementations in older versions of GCC and Clang were buggy and behaved differently than they do in newer versions, so making sure that QCoro behaves correctly across a wide range of compilers was getting more difficult as we implemented more and more complex and advanced features.

A coroutine-friendly version of QFuture::takeResult() is now available in the

form of QCoroFuture::takeResult() when building QCoro against Qt 6 (#217).

QCoro::waitFor(QCoro::Task<T>) no longer requires that the task return type T is default-constructible (#223, Joey Richey)

- QCoroIODevice::write() always returning 0 instead of bytes written (#211, Daniel Vrátil)
- std::optional access in QCoroIODevice::write
- qCoro() would resume the awaiter in the sender’s thread context (#213, Daniel Vrátil)
- #include <exception> (#220, Micah Terhaar)
- QNetworkAccessManager is destroyed from a coroutine awaiting on a network reply (#231, Daniel Vrátil)

If you enjoy using QCoro, consider supporting its development on GitHub Sponsors or buy me a coffee on Ko-fi (after all, more coffee means more code, right?).

In 2021 I decided to take a break from contributing to KDE, since I felt that I’ve been losing motivation and energy to contribute for a while… But I’ve been slowly getting back to hacking on KDE stuff for the past year, which ended in me going to Toulouse this year to attend the annual KDE PIM Sprint, my first in 5 years.

I’m very happy to say that we have /a lot/ going on in PIM, and even though not everything is in the best shape and the community is quite small (there were only four of us at the sprint), we have great plans for the future, and I’m happy to be part of it.

The sprint was officially supposed to start on Saturday, but everyone arrived already on Friday, so why wait? We wrote down the topics to discuss, put them on a whiteboard and got to it.

We’ve managed to discuss some pretty important topics - how we want to proceed with deprecation and removal of some components, how to improve our test coverage or how to improve indexing and much much more.

I arrived to the sprint with two big topics to discuss: milestones and testing:

The idea is to create milestones for all our bigger efforts that we work (or want to work) on. The milestones should be concrete goals that are achievable within a reasonable time frame and have a clear definition of done. Each milestone should then be split into smaller tasks that can be tackled by individuals. We hope that this will help to make KDE PIM more attractive to new contributors, who can now clearly see what is being worked on and can find very concrete, bite-sized tasks to work on.

As a result, we took all the ongoing tasks and turned most of them into milestones in GitLab. It’s still very much a work in progress and we still need to break down many milestones into smaller tasks, but the general ideas are out there.

Akonadi Resources provide a “bridge” between Akonadi Server and individual services, like IMAP servers, DAV servers, Google Calendar etc. But we have no tests to verify that our Resources can talk to the services and vice versa. The plan is to create a testing framework (in Python) so that we can have automated nightly tests to verify that e.g. the IMAP resource interfaces properly with common IMAP server implementations, including major proprietary ones like Gmail or Office365. We want to achieve decent coverage for all our resources. This is a big project, but I think it’s a very exciting one as it includes not just programming, but also figuring out and building some infrastructure to run e.g. Dovecot, NextCloud and others in Docker to test against.

On Saturday we started quite early, all the delicious French pastry is not going to eat itself, is it? After breakfast we continued with discussions: we discussed tags support and how to improve our PR. But we also managed to produce some code. I implemented syncing of iCal categories with Akonadi tags, so the tags are becoming more useful. I also prepared Akonadi to cleanly handle the planned deprecation and retirement of KJots, KNotes and their accompanying resources, as well as the planned removal of the Akonadi Kolab Resource (in favor of using IMAP+DAV).

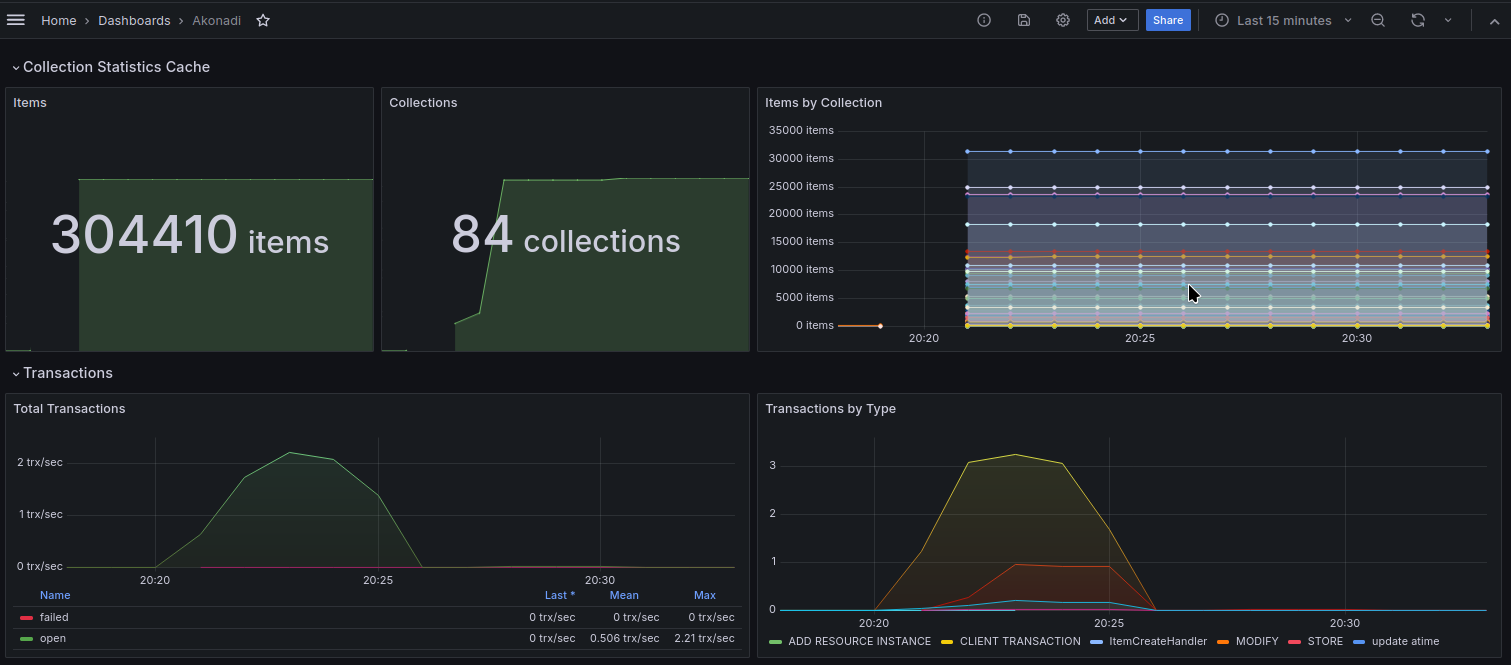

One of the tasks I want to look into is improving how we do database transactions in the Akonadi Server. To get some data out of it, I shoved a Prometheus exporter into Akonadi, hooked it up to a local Prometheus service, threw together a Grafana dashboard, and here we are:

We decided to order some pizzas for dinner and stayed at the venue hacking until nearly 11 o’clock.

On the last day of the sprint we wrapped up the discussions and focused on actually implementing some of the ideas. I spent most of the time extending the Migration agent to extract tags from all existing events and todos already stored in Akonadi and helped to create some of the milestones on the GitLab board. We also came up with a plan for a KDE PIM BoF at this year’s Akademy, where we want to present our progress on the respective milestones and to give contributors a chance to tell us about the biggest hurdles they face when trying to contribute to KDE PIM, so that we can make it easier for them to get involved.

I think it was a very productive sprint and I am really excited to be involved in PIM again. Can’t wait to meet up with everyone again at Akademy in September.

Go check out Kevin’s and Carl’s reports to see what else they have been up to during the sprint.

Did any of the milestones catch your eye, or do you have any questions? Come talk to us in our Matrix channel.

Finally, many thanks to Kevin for organizing the sprint, Étincelle Coworking for providing us with a nice and spacious venue and KDE e.V. for supporting us on travel.

Finally, if you would like such meetings to happen in the future so that we can push forward your favorite software, please consider making a tax-deductible donation to the KDE e.V. foundation.

Thank you to everyone who reported issues and contributed to QCoro. Your help is much appreciated!

Qt has a feature where signals can be made “private” (in the sense that only the class

that defines the signal can emit it) by appending a QPrivateSignal argument to the

signal method:

class MyObject : public QObject {
    Q_OBJECT
    ...
Q_SIGNALS:
    void error(int code, const QString &message, QPrivateSignal);
};

QPrivateSignal is a type that is defined inside the Q_OBJECT macro, so it’s

private and as such only MyObject class can emit the signal, since only MyObject

can instantiate QPrivateSignal:

void MyObject::handleError(int code, const QString &message)
{
    Q_EMIT error(code, message, QPrivateSignal{});
}

QCoro has a feature that makes it possible to co_await a signal emission and

receive the signal’s arguments as a tuple:

MyObject myObject;
// Await the error() signal itself (handleError() is the method that emits it)
const auto [code, message] = co_await qCoro(&myObject, &MyObject::error);

While it was possible to co_await a “private” signal previously, it would

return the QPrivateSignal value as an additional value in the result tuple

and on some occasions would not compile at all.

In QCoro 0.10, we can detect the QPrivateSignal argument and drop it inside QCoro

so that it does not cause trouble and does not clutter the result type.

Achieving this wasn’t simple, as it’s not really possible to detect the type (because

it’s private), e.g. code like this would fail to compile, because we are not allowed

to refer to Obj::QPrivateSignal, since that type is private to Obj.

template<typename T, typename Obj>
constexpr bool is_qprivatesignal = std::is_same_v<T, typename Obj::QPrivateSignal>;

After many different attempts we ended up abusing __PRETTY_FUNCTION__

(and __FUNCSIG__ on MSVC) and checking whether the function’s name contains

QPrivateSignal string in the expected location. It’s a wacky hack, but hey - if it

works, it’s not stupid :). And thanks to improvements in compile-time evaluation in

C++20, the check is evaluated completely at compile-time, so there’s no runtime

overhead of obtaining current source location and doing string comparisons.
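The general idea can be sketched in a few lines of standard C++. This is a simplified illustration and not QCoro's actual code: signatureOf(), typeNameContains(), mentionsPrivateTag(), Sender and PrivateTag are all hypothetical names, PrivateTag merely stands in for QPrivateSignal, and the sketch looks for the substring anywhere in the signature instead of only in the expected location.

```cpp
#include <string_view>

// Returns the compiler-generated signature of this instantiation, which
// spells out the name of T, including private nested types.
template<typename T>
constexpr std::string_view signatureOf() {
#if defined(_MSC_VER) && !defined(__clang__)
    return __FUNCSIG__;
#else
    return __PRETTY_FUNCTION__;
#endif
}

// True if the spelled-out name of T contains `needle`.
template<typename T>
constexpr bool typeNameContains(std::string_view needle) {
    return signatureOf<T>().find(needle) != std::string_view::npos;
}

class Sender {
    struct PrivateTag {};   // stand-in for QPrivateSignal, private to Sender
public:
    void changed(int, PrivateTag) {}   // "private signal"
    void plain(int) {}                 // ordinary member function
};

// The private type is never named here. It only shows up inside the deduced
// member-function-pointer type, which we are allowed to inspect.
template<typename Signal>
constexpr bool mentionsPrivateTag(Signal) {
    return typeNameContains<Signal>("PrivateTag");
}

// Both checks are evaluated entirely at compile time.
static_assert(mentionsPrivateTag(&Sender::changed));
static_assert(!mentionsPrivateTag(&Sender::plain));
```

The trick works because access control applies to names, not to types: code outside Sender cannot write Sender::PrivateTag, but template argument deduction can still produce a type that contains it, and the compiler happily prints that type into the function signature string.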

A big part of QCoro consists of template classes, so there’s a lot of code in headers. In my opinion, some of the files (especially qcorotask.h) were getting hard to read and navigate, which made it harder to just see the API of the class (like you get with non-template classes), which is what users of a library are usually most interested in.

Therefore I decided to move definitions into separate files, so that they don’t clutter the main include files.

This change is completely source- and binary-compatible, so QCoro users don’t have to make any changes to their code. The only difference is that the main QCoro headers are much prettier to look at now.

- QCoro::waitFor() now re-throws exceptions (#172, Daniel Vrátil)
- QWebSocket::error with QWebSocket::errorOccurred in QCoroWebSockets module (#174, Marius P)
- QCoro::connect() not working with lambdas (#179, Jonah Brüchert)
- std::coroutine_traits isn't a class template error with LLVM 16 (#196, Rafael Sadowski)

This is a rather small release with only two new features and one small improvement.

Big thank you to Xstrahl Inc. who sponsored development of new features included in this release and of QCoro in general.

And as always, thank you to everyone who reported issues and contributed to QCoro. Your help is much appreciated!

The original release announcement on qcoro.dvratil.cz.

QCoro::waitFor()

Up until this version, QCoro::waitFor() was only usable for QCoro::Task<T>.

Starting with QCoro 0.8.0, it is possible to use it with any type that satisfies

the Awaitable concept. The concept has also been fixed so that it is satisfied not just by

types with the await_resume(), await_suspend() and await_ready() member functions,

but also types with member operator co_await() and non-member operator co_await()

functions.

QCoro::sleepFor() and QCoro::sleepUntil()

Working both on the QCoro codebase and on some third-party codebases that use QCoro, it’s clear that there’s a use case for a simple coroutine that will sleep for a specified amount of time (or until a specified timepoint). It is especially useful in tests, where simulating delays, especially in asynchronous code, is common.

Previously I used to create small coroutines like this:

QCoro::Task<> timer(std::chrono::milliseconds timeout) {
    QTimer timer;
    timer.setSingleShot(true);
    timer.start(timeout);
    co_await timer;
}

Now we can do the same simply by using QCoro::sleepFor().

Read the documentation for QCoro::sleepFor()

and QCoro::sleepUntil() for more details.

QCoro::moveToThread()

A small helper coroutine that allows a part of a function to be executed in the context of another thread.

QCoro::Task<> App::runSlowOperation(QThread *helperThread) {
    // Still on the main thread
    ui->statusLabel->setText(tr("Running"));
    const QString input = ui->userInput->text();

    co_await QCoro::moveToThread(helperThread);
    // Now we are running in the context of the helper thread, the main thread is not blocked
    // It is safe to use `input` which was created in another thread
    doSomeComplexCalculation(input);

    // Move the execution back to the main thread
    co_await QCoro::moveToThread(this->thread());
    // Runs on the main thread again
    ui->statusLabel->setText(tr("Done"));
}

Read the documentation for QCoro::moveToThread for more details.

The major new feature in this release is initial QML support, contributed by

Jonah Brüchert. Jonah also contributed a QObject::connect helper and

a coroutine version of QQuickImageProvider. As always, this release includes

some smaller enhancements and bugfixes, you can find a full list of them

on the Github release page.

As always, a big thank you to everyone who reported issues and contributed to QCoro. Your help is much appreciated!

Jonah Brüchert has contributed initial support for QML. Unfortunately, we

cannot extend the QML engine to support the async and await keywords from

ES8, but we can make it possible to set a callback from QML that should be

called when the coroutine finishes.

The problem with QCoro::Task is that it is a template class so it cannot be

registered into the QML type system and used from inside QML. The solution

that Jonah has come up with is to introduce QCoro::QmlTask class, which

can wrap any awaitable (be it QCoro::Task or any generic awaitable type)

and provides a then() method that can be called from QML and that takes

a JavaScript function as its only argument. The function will be invoked by

QCoro::QmlTask when the wrapped awaitable has finished.

The disadvantage of this approach is that in order to expose a class that

uses QCoro::Task<T> as return types of its member functions into QML, we

need to create a wrapper class that converts those return types to

QCoro::QmlTask.

Luckily, we should be able to provide a smoother user experience when using QCoro in QML for Qt6 in a future QCoro release.

class QmlCoroTimer: public QObject {
    Q_OBJECT
public:
    explicit QmlCoroTimer(QObject *parent = nullptr)
        : QObject(parent)
    {}

    Q_INVOKABLE QCoro::QmlTask start(int milliseconds) {
        // Implicitly wraps QCoro::Task<> into QCoro::QmlTask
        return waitFor(milliseconds);
    }

private:
    // A simple coroutine that co_awaits a timer timeout
    QCoro::Task<> waitFor(int milliseconds) {
        QTimer timer;
        timer.start(milliseconds);
        co_await timer;
    }
};

...
QCoro::Qml::registerTypes();
// Register as version 1.0 so that it matches the QML import
qmlRegisterType<QmlCoroTimer>("cz.dvratil.qcoro.example", 1, 0, "QmlCoroTimer");

import cz.dvratil.qcoro.example 1.0

Item {
    QmlCoroTimer {
        id: timer
    }

    Component.onCompleted: {
        // Attaches a callback to be called when the QmlCoroTimer::waitFor()
        // coroutine finishes.
        timer.start(1000).then(() => {
            console.log("1 second elapsed!");
        });
    }
}

Read the documentation for QCoro::QmlTask for more details.

The QCoro::connect() helper is similar to QObject::connect() - except that

you pass in a QCoro::Task<T> instead of a sender and signal pointers. While

using the .then() continuation can achieve similar results, the main

difference is that QCoro::connect() takes a pointer to a context (receiver)

QObject. If the receiver is destroyed before the connected QCoro::Task<T>

finishes, the slot is not invoked.

void MyClass::buttonClicked() {
    QCoro::Task<QByteArray> task = sendNetworkRequest();
    // If this object is deleted before the `task` completes,
    // the slot is not invoked.
    QCoro::connect(std::move(task), this, &MyClass::handleNetworkReply);
}

See the QCoro documentation for more details.

I’m pleased to announce release 0.6.0 of QCoro, a library that allows using C++20 coroutines with Qt. This release brings several major new features alongside a bunch of bugfixes and improvements inside QCoro.

The four major features are:

🎉 Starting with 0.6.0 I no longer consider this library to be experimental (since clearly the experiment worked :-)) and its API to be stable enough for general use. 🎉

As always, a big thank you to everyone who reported issues and contributed to QCoro. Your help is much appreciated!

Unlike regular functions (or QCoro::Task<>-based coroutines) which can only ever

produce at most a single result (through a return or co_return statement), generators

can yield results repeatedly without terminating. In QCoro we have two types of generators:

synchronous and asynchronous. Synchronous means that the generator produces each value

synchronously. In QCoro those are implemented as QCoro::Generator<T>:

// A generator that produces a sequence of numbers from 0 to `end`.
QCoro::Generator<int> sequence(int end) {
    for (int i = 0; i <= end; ++i) {
        // Produces current value of `i` and suspends.
        co_yield i;
    }
    // End the generator
}

int sumSequence(int end) {
    int sum = 0;
    // Loops over the returned Generator, resuming the generator on each iteration
    // so it can produce a value that we then consume.
    for (int value : sequence(end)) {
        sum += value;
    }
    return sum;
}

The Generator interface implements begin() and end() methods which produce an

iterator-like type. When the iterator is incremented, the generator is resumed to yield

a value and then suspended again. The iterator-like interface is not mandated by the C++

standard (the C++ standard provides no requirements for generators), but it is an

intentional design choice, since it makes it possible to use the generators with existing

language constructs as well as standard-library and Qt features.

You can find more details about synchronous generators in the QCoro::Generator<T>

documentation.

Asynchronous generators work in a similar way, but they produce values asynchronously,

that is, the result of the generator must be co_awaited by the caller.

QCoro::AsyncGenerator<QUrl> paginator(const QUrl &baseUrl) {

QUrl pageUrl = baseUrl;

Q_FOREVER {

pageUrl = co_await getNextPage(pageUrl); // co_awaits next page URL

if (pageUrl.isNull()) { // if empty, we reached the last page

break; // leave the loop

}

co_yield pageUrl; // finally, yield the value and suspend

}

// end the generator

}

QCoro::AsyncGenerator<QString> pageReader(const QUrl &baseUrl) {

// Create a new generator

auto generator = paginator(baseUrl);

// Wait for the first value

auto it = co_await generator.begin();

auto end = generator.end();

while (it != end) { // while the `it` iterator is valid...

// Asynchronously retrieve the page content

const auto content = co_await fetchPageContent(*it);

// Yield it to the caller, then suspend

co_yield content;

// When resumed, wait for the paginator generator to produce another value

co_await ++it;

}

}

QCoro::Task<> downloader(const QUrl &baseUrl) {
    int pageNumber = 1;
    // `QCORO_FOREACH` is like `Q_FOREACH` for asynchronous iterators
    QCORO_FOREACH(const QString &content, pageReader(baseUrl)) {
        // When a value is finally produced, write it to a file
        QFile file(QStringLiteral("page%1.html").arg(pageNumber));
        if (file.open(QIODevice::WriteOnly)) {
            file.write(content.toUtf8());
        }
        ++pageNumber;
    }
}

Async generators also have begin() and end() methods, which provide asynchronous iterator-like types. Unlike with synchronous generators, the begin() method is itself a coroutine and must be co_awaited to obtain the initial iterator. The increment operation of the iterator must then be co_awaited as well to obtain the iterator for the next value. Unfortunately, asynchronous iterators cannot be used with range-based for loops, so QCoro provides the QCORO_FOREACH macro to make using asynchronous generators simpler.

Read the documentation for QCoro::AsyncGenerator<T> for more details.

The QCoroWebSockets module provides QCoro wrappers for the QWebSocket and QWebSocketServer classes to make them usable with coroutines. Like the other modules, it’s a standalone shared or static library that you must explicitly link against in order to use it, so you don’t have to worry that QCoro would pull a WebSockets dependency into your project if you don’t want it.

QCoro::Task<> ChatApp::handleNotifications(const QUrl &wsServer) {

if (!co_await qCoro(mWebSocket).open(wsServer)) {

qWarning() << "Failed to open websocket connection to" << wsServer << ":" << mWebSocket->errorString();

co_return;

}

qDebug() << "Connected to" << wsServer;

// Loops whenever a message is received until the socket is disconnected

QCORO_FOREACH(const QString &rawMessage, qCoro(mWebSocket).textMessages()) {

const auto message = parseMessage(rawMessage);

switch (message.type) {

case MessageType::ChatMessage:

handleChatMessage(message);

break;

case MessageType::PresenceChange:

handlePresenceChange(message);

break;

case MessageType::Invalid:

qWarning() << "Received an invalid message:" << message.error;

break;

}

}

}

The textMessages() method returns an asynchronous generator, which yields a message whenever one arrives. The messages are received and enqueued as long as the generator object exists. The difference between using a generator and just co_awaiting the next emission of the QWebSocket::textMessageReceived() signal is that the generator holds a connection to the signal for its entire lifetime, so no signal emission is lost. If we were only co_awaiting a signal emission, any message received before we start co_awaiting again after handling the current message would be lost.
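The difference between a connection that lives as long as the generator and a connection that only exists while we are waiting can be shown with a small, Qt-free sketch. `MiniSignal` is a hypothetical stand-in for a Qt signal, and both functions below are invented for this illustration. Neither reflects how QCoro is actually implemented; they only model which emissions reach the consumer.

```cpp
#include <functional>
#include <string>
#include <utility>
#include <vector>

// Hypothetical stand-in for a Qt signal: it only calls whoever is connected
// at the moment fire() is called.
class MiniSignal {
public:
    using Slot = std::function<void(const std::string &)>;

    void connect(Slot slot) { slots_.push_back(std::move(slot)); }
    void disconnectAll() { slots_.clear(); }
    void fire(const std::string &message) const {
        for (const auto &slot : slots_) {
            slot(message);
        }
    }

private:
    std::vector<Slot> slots_;
};

// Generator-like consumer: stays connected the whole time and queues everything.
std::vector<std::string> receiveWithPersistentConnection() {
    MiniSignal signal;
    std::vector<std::string> queue;
    signal.connect([&queue](const std::string &message) { queue.push_back(message); });

    signal.fire("first");
    signal.fire("second"); // arrives while "first" would still be handled
    signal.fire("third");
    return queue;
}

// One-shot consumer: connected only while it is waiting for the next message.
std::vector<std::string> receiveWithOneShotConnection() {
    MiniSignal signal;
    std::vector<std::string> received;
    const auto onMessage = [&received](const std::string &message) {
        received.push_back(message);
    };

    signal.connect(onMessage); // start waiting
    signal.fire("first");
    signal.disconnectAll();    // stop waiting, handle "first"
    signal.fire("second");     // nobody is connected: this message is lost
    signal.connect(onMessage); // start waiting again
    signal.fire("third");
    return received;
}
```

The persistent version ends up with all three messages, while the one-shot version never sees the message that arrived between two waits.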

You can find more details about QCoroWebSocket and QCoroWebSocketServer in QCoro’s WebSockets module documentation.

You can build QCoro without the WebSockets module by passing -DQCORO_WITH_QTWEBSOCKETS=OFF

to CMake.

The task.h header and its camelcase variant Task have been deprecated in QCoro 0.6.0 in favor of qcorotask.h (and its camelcase version QCoroTask). The main reasons are to avoid such a generic name in a library and to make the name consistent with the rest of QCoro’s public headers, which all start with the qcoro (or QCoro) prefix.

The old header is still present and fully functional, but including it will produce a

warning that you should port your code to use qcorotask.h. You can suppress the warning

by defining QCORO_NO_WARN_DEPRECATED_TASK_H in the compiler definitions:

CMake:

add_compile_definitions(QCORO_NO_WARN_DEPRECATED_TASK_H)

QMake:

DEFINES += QCORO_NO_WARN_DEPRECATED_TASK_H

The header file will be removed at some point in the future, at the latest in the 1.0 release.

You can also pass -DQCORO_DISABLE_DEPRECATED_TASK_H=ON to CMake when compiling QCoro

to prevent it from installing the deprecated task.h header.

The clang compiler has been fully supported by QCoro since 0.4.0. This version of QCoro introduces support for clang-cl and apple-clang.

Clang-cl is a compiler driver that provides MSVC-compatible command-line options, allowing clang and LLVM to be used as a drop-in replacement for the MSVC toolchain.

Apple-clang is the official build of clang provided by Apple on macOS, which may differ from the upstream clang releases.

<chrono> include (#82)

QCoroFwd header with forward-declarations of relevant types (#71)

task.h header file in favor of qcorotask.h (#70)

You can download QCoro 0.6.0 here or check the latest sources on QCoro GitHub.

If you are interested in learning more about QCoro, go read the documentation, look at the first release announcement, which contains a nice explanation and example, or watch the recording of my talk about C++20 coroutines and QCoro at this year’s Akademy.

After another few months I’m happy to announce a new release of QCoro, which brings several new features and a bunch of bugfixes.

Sometimes it’s not possible to co_await a coroutine - usually because you need to integrate with third-party code that is not coroutine-ready. A good example might be implementing QAbstractItemModel, where none of the virtual methods are coroutines and thus it’s not possible to use co_await in them.

To still make it possible to call coroutines from such code, QCoro::Task<T> now has a new method, .then(), which allows attaching a continuation callback that will be invoked by QCoro when the coroutine represented by the Task finishes.

void notACoroutine() {

someCoroutineReturningQString().then([](const QString &result) {

// Will be invoked when someCoroutineReturningQString() finishes.

// The result of the coroutine is passed as an argument to the continuation.

});

}

The continuation itself might be a coroutine, and the result of the .then() member function is again a Task<R> (where R is the return type of the continuation callback), so it is possible to chain multiple continuations as well as to co_await the entire chain.
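The chaining behaviour can be illustrated without QCoro or Qt. The sketch below uses a hypothetical `Deferred<T>` type, invented for this example, whose then() returns a new `Deferred<R>` in the same way that .then() returns a Task<R>. It is only a model of the idea: it does not involve coroutines, does not support continuations returning void, and says nothing about how QCoro implements Task<T>.

```cpp
#include <functional>
#include <memory>
#include <optional>
#include <type_traits>
#include <utility>
#include <vector>

// Hypothetical stand-in for an asynchronous result. NOT QCoro::Task<T>.
template <typename T>
class Deferred {
public:
    // Completes the operation and runs all continuations attached so far.
    void resolve(T value) const {
        state_->value = std::move(value);
        auto callbacks = std::move(state_->callbacks);
        state_->callbacks.clear();
        for (auto &callback : callbacks) {
            callback(*state_->value);
        }
    }

    // Attaches a continuation. The returned Deferred<R> is resolved with the
    // continuation's return value, which is what makes chaining possible.
    template <typename F>
    auto then(F &&callback) const {
        using R = std::invoke_result_t<F &, const T &>;
        Deferred<R> next;
        auto run = [next, cb = std::forward<F>(callback)](const T &value) mutable {
            next.resolve(cb(value));
        };
        if (state_->value) {
            run(*state_->value); // already finished: run immediately
        } else {
            state_->callbacks.push_back(std::move(run));
        }
        return next;
    }

private:
    struct State {
        std::optional<T> value;
        std::vector<std::function<void(const T &)>> callbacks;
    };
    // Shared, so copies captured by continuations refer to the same result.
    std::shared_ptr<State> state_ = std::make_shared<State>();
};
```

With this sketch, `request.then(f).then(g)` runs `f` when `request` is resolved and then passes the result of `f` to `g`, so a non-coroutine caller can describe the whole pipeline up front.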

Up until now, each operation from the QCoro wrapper types returned a special awaitable - for example, QCoroIODevice::read() returned QCoro::detail::QCoroIODevice::ReadOperation. In most cases users of QCoro do not need to concern themselves with that type, since they can still directly co_await the returned awaitable. However, it unnecessarily leaks implementation details of QCoro into the public API and it makes it harder to return a coroutine from a non-coroutine function.

As of QCoro 0.5.0, all the operations now return Task<T>, which makes the API consistent. As a secondary effect,

all the operations can have a chained continuation using the .then() continuation, as described above.

Qt doesn’t allow specifying a timeout for many operations, because they are typically non-blocking. But a timeout makes sense in most QCoro cases, because they are a combination of a wait and the non-blocking operation. Let’s take QIODevice::read() for example: the Qt version doesn’t have any timeout, because the call will never block - if there’s nothing to read, it simply returns an empty QByteArray.

On the other hand, QCoroIODevice::read() is an asynchronous operation, because under the hood, it’s a coroutine that asynchronously calls a sequence of:

device->waitForReadyRead();

device->read();

Since QIODevice::waitForReadyRead() takes a timeout argument, it makes sense for QCoroIODevice::read()

to also take (an optional) timeout argument. This and many other operations have gained support for timeout.
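The "wait, then read" combination and the place where the timeout fits in can be sketched in plain C++. Everything in the block is hypothetical: `MiniDevice` is a single-threaded stand-in for a QIODevice whose data "arrives" after a fixed delay, and `readWithTimeout()` is an invented helper. Unlike QCoro, which suspends the coroutine while waiting, this sketch simply blocks and polls. It only demonstrates why the combined operation naturally takes a timeout while the plain read does not.

```cpp
#include <chrono>
#include <optional>
#include <string>
#include <thread>
#include <utility>

using Clock = std::chrono::steady_clock;
using std::chrono::milliseconds;

// Hypothetical stand-in for a QIODevice: the data becomes available at a
// fixed point in time instead of coming from a real socket or file.
class MiniDevice {
public:
    MiniDevice(std::string data, milliseconds arrivalDelay)
        : pending_(std::move(data)), arrival_(Clock::now() + arrivalDelay) {}

    // Non-blocking read: returns the data if it has arrived, otherwise an
    // empty string. It never waits, so a timeout would be meaningless here.
    std::string read() {
        if (Clock::now() < arrival_) {
            return {};
        }
        return std::exchange(pending_, {});
    }

    // Waits (by polling) until data is available or the timeout expires.
    bool waitForReadyRead(milliseconds timeout) const {
        const auto deadline = Clock::now() + timeout;
        while (Clock::now() < arrival_) {
            if (Clock::now() >= deadline) {
                return false; // timed out before any data arrived
            }
            std::this_thread::sleep_for(milliseconds(1));
        }
        return !pending_.empty();
    }

private:
    std::string pending_;
    Clock::time_point arrival_;
};

// The combined operation: wait first, then do the non-blocking read.
// The timeout applies to the waiting part only.
std::optional<std::string> readWithTimeout(MiniDevice &device, milliseconds timeout) {
    if (!device.waitForReadyRead(timeout)) {
        return std::nullopt;
    }
    return device.read();
}
```

A caller can then distinguish "the wait timed out" (an empty optional) from "data was read", which a plain non-blocking read cannot express.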

It’s been a while since I added a new wrapper for a Qt class, so QCoro 0.5.0 adds a wrapper for QThread. It’s now possible to co_await thread start and end:

std::unique_ptr<QThread> thread(QThread::create([]() {
    ...
}));
ui->setLabel(tr("Starting thread..."));
thread->start();
co_await qCoro(thread.get()).waitForStarted();
ui->setLabel(tr("Calculating..."));
co_await qCoro(thread.get()).waitForFinished();
ui->setLabel(tr("Finished!"));

.then() continuation for Task<T> (#39)

QCoro::waitFor() getting stuck when coroutine returns synchronously (#46)

Task<T> from all operations (#54)

QThread (commit 832d931)

Thanks to everyone who contributed to QCoro!

You can download QCoro 0.5.0 here or check the latest sources on QCoro GitHub.

If you are interested in learning more about QCoro, go read the documentation, look at the first release announcement, which contains a nice explanation and example, or watch the recording of my talk about C++20 coroutines and QCoro at this year’s Akademy.